Managing BigQuery slots effectively is about more than just scaling up capacity.

It requires analyzing workloads, predicting demand, and applying rule-based governance to strike the balance between cost predictability and performance.

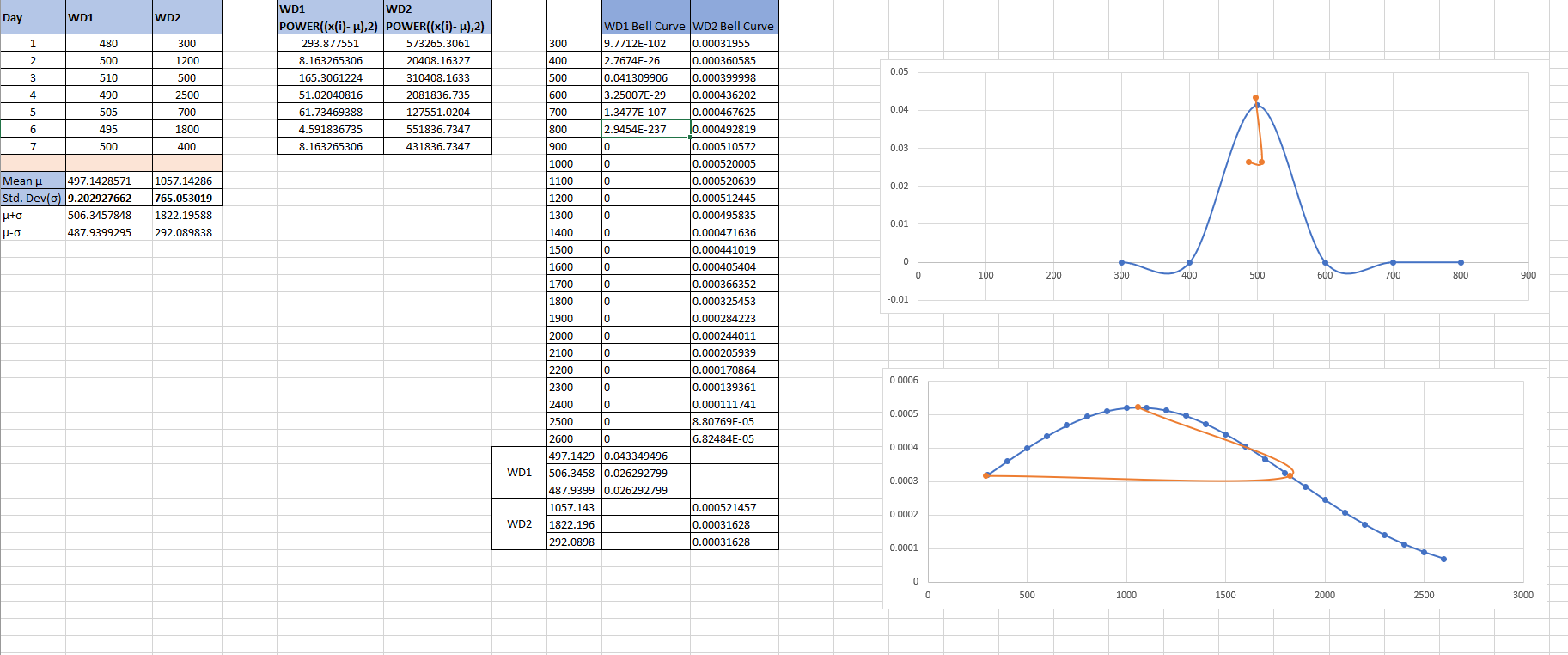

Step 1: Recognizing Different Workload Behaviors

Not all workloads are alike. Some run with clockwork regularity, consuming a steady number of slots every day. Others stay quiet most of the time, only to surge suddenly and demand far more than expected.

This creates two clear categories:

- Stable workloads → predictable, low variance (low stddev), good candidates for committed slots.

- Bursty workloads → High variance (High stddev), spiky, better left to autoscale.

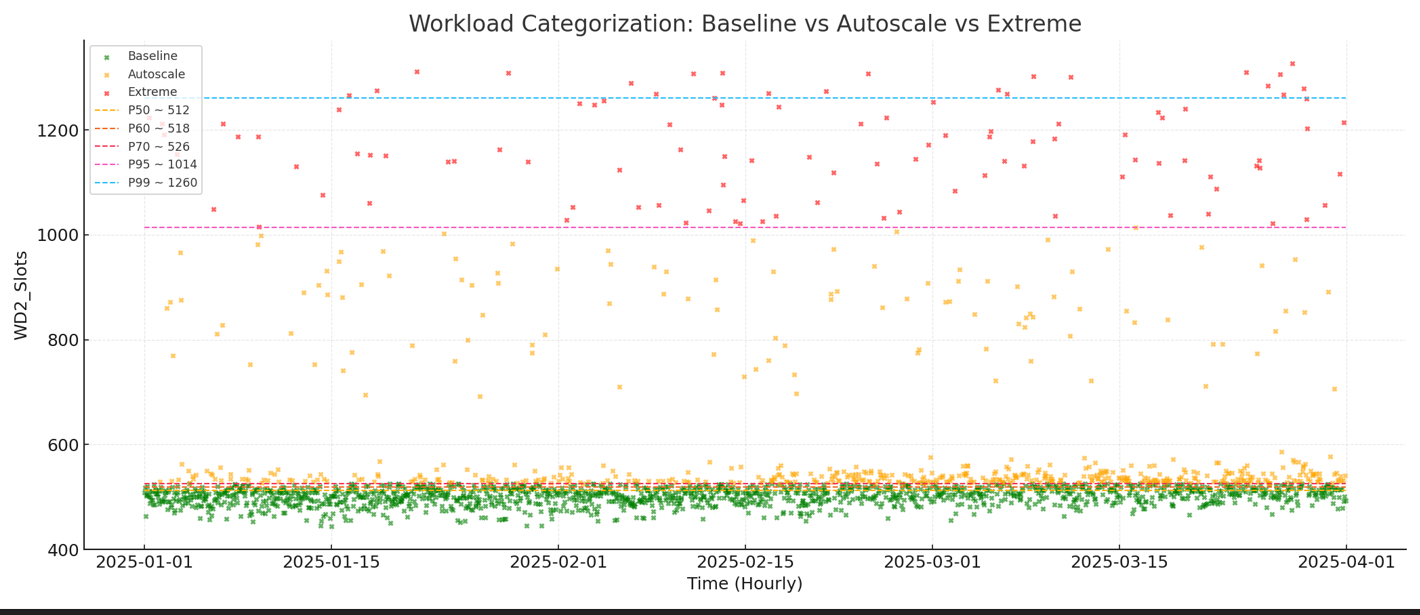

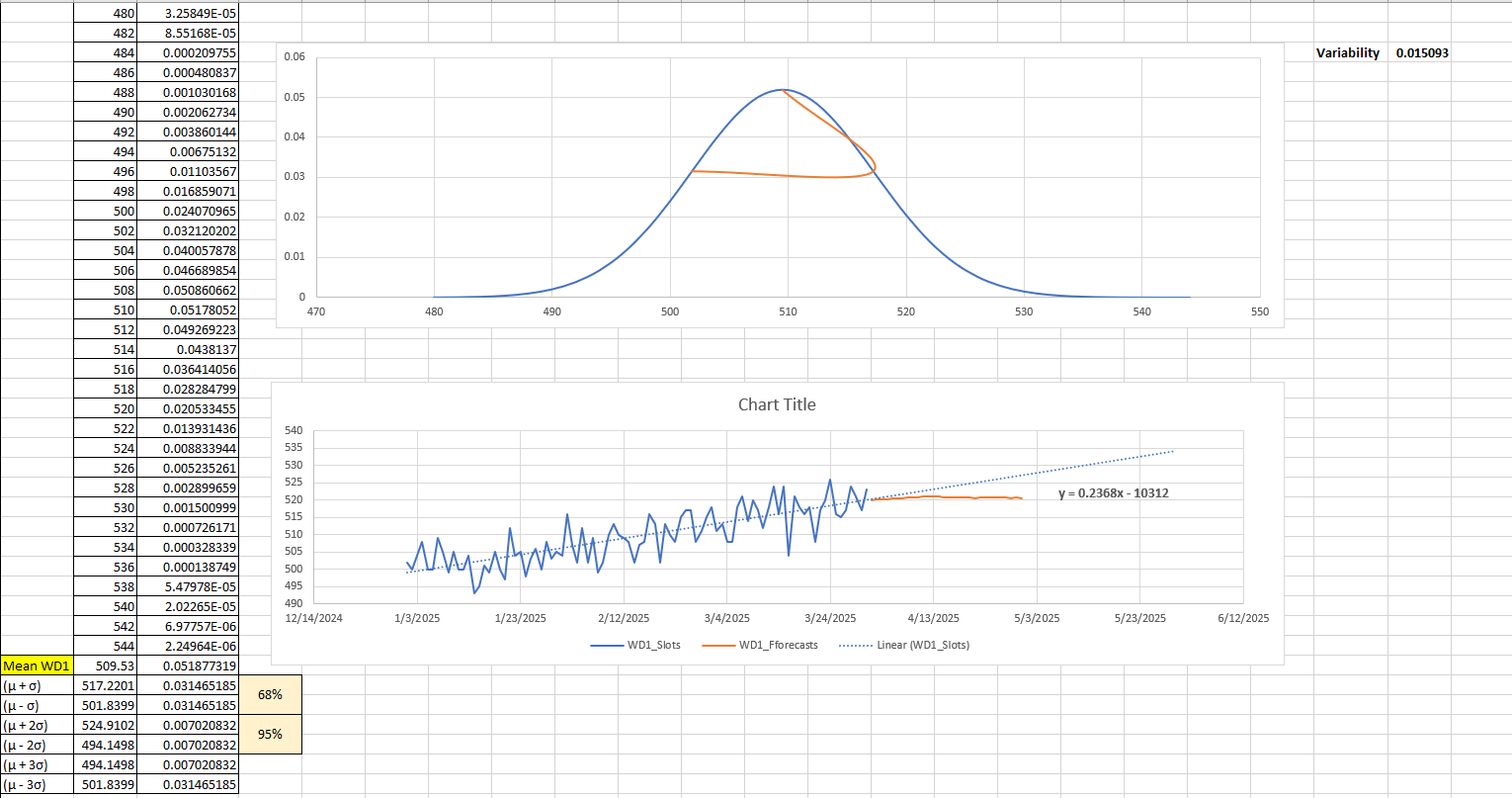

Step 2: Defining the Baseline

Once workloads are classified, the next step is to define a baseline: the steady capacity that can safely be committed without fear of waste.

Instead of guessing, organizations can use percentile-based thresholds:

- Baseline commitments around the 60th–70th percentile of historical usage.

- Autoscaling to cover peaks up to the 90th–95th percentile.

- Extreme spikes above the 99th percentile are tolerated with autoscale only.

This ensures the majority of usage is locked in at a discount, while peaks remain flexible.

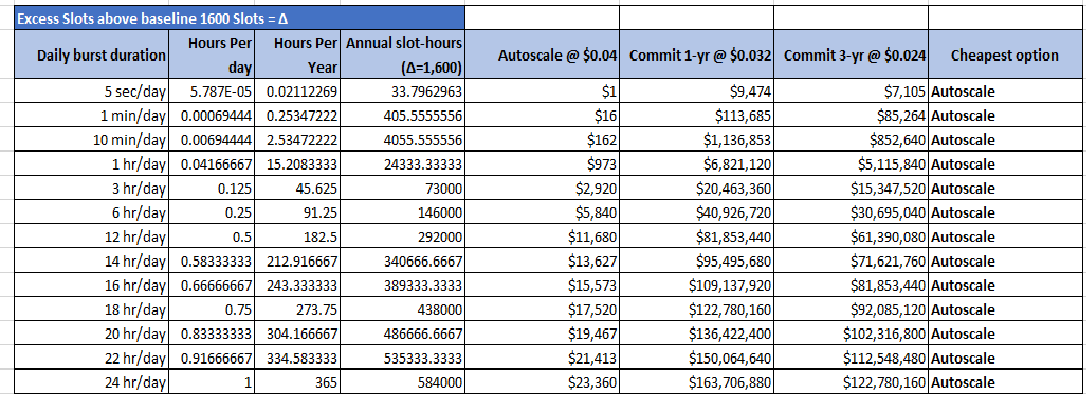

Step 3: Handling Bursts

Bursts are where costs can spiral. But not all bursts are created equal.

- Short-lived bursts (minutes or a few hours per year) → keep them on autoscale, since the total cost is negligible.

- Sustained bursts (hundreds or thousands of hours) → these add up quickly and are worth folding into the baseline.

The key is to measure both duration and frequency. A burst that happens every day for hours is very different from one that happens once a month for minutes.

Step 4: Adding Governance

Technology decisions are only half the story — governance makes the system sustainable.

Policies and rules keep costs predictable without constant human oversight:

- Quotas to prevent runaway queries.

- Idle slot alerts to reassign unused capacity across teams.

- Materialized views and partitioned tables to reduce unnecessary data scans.

- Scheduled query staggering to prevent multiple heavy jobs from clashing at the same time.

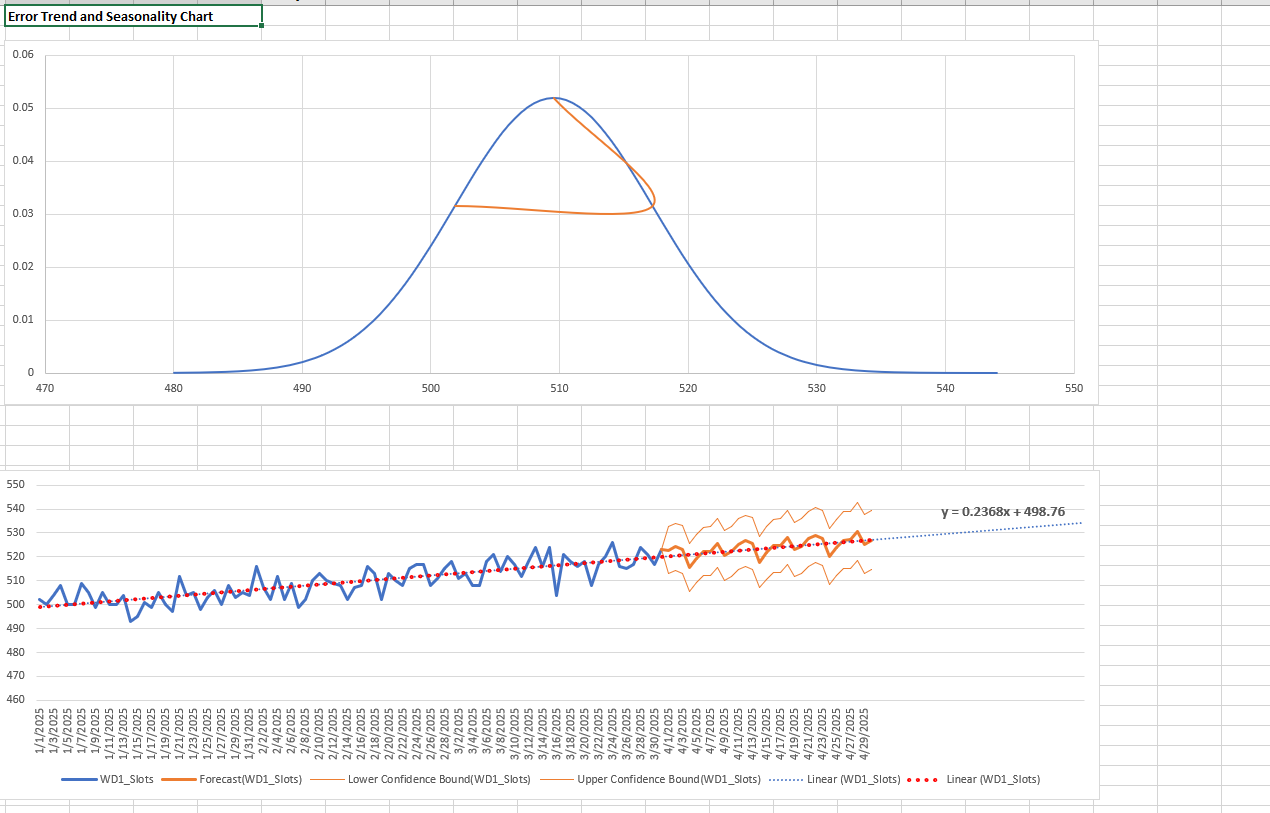

Step 5: Forecasting for Growth

Capacity isn’t static. Data pipelines expand, new use cases appear, and demand grows steadily over time.

Using time-series forecasting, organizations can project future slot demand and adjust commitments accordingly. Adding a a calibrated buffer for new workloads keeps the system resilient without overcommitting.

Regular reviews — every quarter or even monthly — ensure that baseline commitments evolve with usage.

Step 6: Translating into Economics

At the end of the day, this all comes down to cost.

- Committed slots: up to 40% cheaper with 3-year agreements.

- Autoscale: more expensive per slot-hour, but only billed when needed.

- Extreme spikes: absorbed flexibly, ensuring no performance trade-offs.

This balance gives finance predictable budgets while giving engineering the elasticity to handle any workload.

Conclusion

BigQuery capacity management is not just a technical exercise — it’s a strategic lever for cost predictability, operational resilience, and scalable growth.

This structured, rule-based approach offers:

- Predictable spend through well-defined commitments.

- Elastic performance by leveraging autoscaling for variability.

- Governance and forecasting that keep the system adaptive to business change.

While this framework provides a strong foundation, it should be continuously refined and adapted to each organization’s unique workload patterns. In doing so, leaders can align engineering execution with financial accountability, ensuring that BigQuery remains both cost-efficient and enterprise-ready.

It’s an evolving space!!